How to Upload a Database to AWS RDS

Migrating Oracle databases to AWS is a common task for many enterprises nowadays, and there are many different ways of doing it. In this article, we will summarize the manual steps and commands that help you work with Oracle Data Pump in Amazon RDS for Oracle.

If you're interested in the DB migration topic, it might be worth checking out our article "CloudFormation: How to create DMS infrastructure for DB migration".

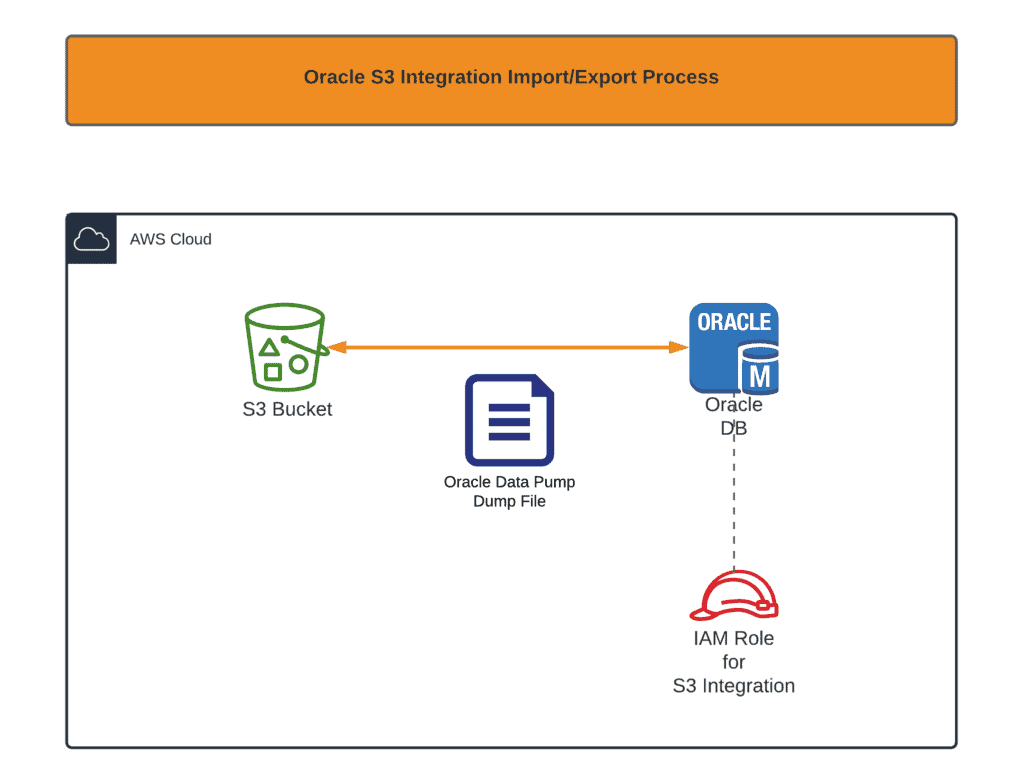

Enable S3 integration

First of all, you need to enable Oracle S3 integration. The whole procedure is fully described in the official documentation. As a short summary, it gives your Oracle RDS instance the ability to access an S3 bucket. For those of you who are using CloudFormation to do that, here are some snippets:

```yaml
DbOptionGroup:
  Type: "AWS::RDS::OptionGroup"
  Properties:
    EngineName: oracle-ee
    MajorEngineVersion: "12.2"
    OptionConfigurations:
      - OptionName: S3_INTEGRATION
    OptionGroupDescription: "Oracle DB Instance Option Group for S3 Integration"

DbInstanceS3IntegrationRole:
  Type: "AWS::IAM::Role"
  Properties:
    AssumeRolePolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Principal:
            Service: rds.amazonaws.com
          Action: "sts:AssumeRole"
    Path: "/"
    Policies:
      - PolicyName: S3Access
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Action:
                - s3:GetObject
                - s3:ListBucket
                - s3:PutObject
              Resource:
                - !Sub "arn:aws:s3:::${DbDumpBucketName}"
                - !Sub "arn:aws:s3:::${DbDumpBucketName}/*"
```

Now, if you're planning to continuously create and delete RDS Oracle instances using CloudFormation, it is better not to attach `DbOptionGroup` to your Oracle instance. CloudFormation will not be able to delete your stack because:

- An automated RDS instance snapshot is created at RDS provisioning time.

- The RDS instance snapshot depends on the option group.

As a result, the option group in your stack will be locked by the automatically created DB snapshot, and you will not be able to delete it.
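If you manage the integration role outside CloudFormation (for example, with the AWS CLI or an SDK), you need the same two policy documents as plain JSON. Here is a minimal Python sketch that builds them from a bucket name; `my-db-dumps` is a hypothetical bucket, and the code only prints the documents, it does not call any AWS API:

```python
import json


def s3_integration_policy(bucket_name: str) -> dict:
    """Build the S3 access policy for the RDS S3 integration role.

    Mirrors the PolicyDocument in the CloudFormation snippet: the bucket ARN
    (needed by s3:ListBucket) and the object ARN pattern (Get/PutObject).
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket", "s3:PutObject"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


def rds_trust_policy() -> dict:
    """Trust policy that lets the RDS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "rds.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # "my-db-dumps" is a hypothetical bucket name.
    print(json.dumps(rds_trust_policy(), indent=2))
    print(json.dumps(s3_integration_policy("my-db-dumps"), indent=2))
```

Keeping both the bucket ARN and the `/*` object ARN matters: `s3:ListBucket` applies to the bucket itself, while `s3:GetObject` and `s3:PutObject` apply to objects inside it.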

Importing Oracle Data Pump file

Create all necessary tablespaces, if needed. Each one can be created by:

```sql
CREATE TABLESPACE MY_TABLESPACE DATAFILE SIZE 5G AUTOEXTEND ON NEXT 1G;
```

Create the necessary schema (user) and grant it the following permissions:

```sql
CREATE USER MY_USER IDENTIFIED BY "MY_PASSWORD";
GRANT UNLIMITED TABLESPACE TO MY_USER;
GRANT CREATE SESSION, RESOURCE, DBA TO MY_USER;
ALTER USER MY_USER QUOTA 100M ON users;
```

Also, for every tablespace you created:

```sql
ALTER USER MY_USER QUOTA 100M ON MY_TABLESPACE;
```

To initiate the dump file copy from the S3 bucket, execute the following query:

```sql
SELECT rdsadmin.rdsadmin_s3_tasks.download_from_s3(
         p_bucket_name    => 'your_s3_bucket_name',
         p_s3_prefix      => '',
         p_directory_name => 'DATA_PUMP_DIR')
       AS TASK_ID
  FROM DUAL;
```

This query returns a task ID, which can be used to track the transfer status:

```sql
SELECT text FROM table(rdsadmin.rds_file_util.read_text_file('BDUMP', 'dbtask-<task_id>.log'));
```

Replace `<task_id>` with the value returned from the previous query.
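If you script the transfer, the log file name is the only part of this query that changes between tasks. A minimal Python sketch, assuming you pass in the `TASK_ID` returned by `download_from_s3` (or `upload_to_s3`); the task ID in the example is made up:

```python
def task_log_query(task_id: str) -> str:
    """Return the query that reads the log of an rdsadmin S3 transfer task.

    The task log lives in the BDUMP directory as dbtask-<task_id>.log.
    """
    # Reject empty IDs and quotes so the value cannot break out of the SQL literal.
    if not task_id or "'" in task_id:
        raise ValueError("task_id must be a non-empty string without quotes")
    return (
        "SELECT text FROM table(rdsadmin.rds_file_util.read_text_file("
        f"'BDUMP', 'dbtask-{task_id}.log'))"
    )


if __name__ == "__main__":
    # Hypothetical task ID; use the TASK_ID value your own query returned.
    print(task_log_query("1234567890123-42"))
```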

You may list all uploaded files using the following query:

```sql
select * from table(RDSADMIN.RDS_FILE_UTIL.LISTDIR('DATA_PUMP_DIR')) order by filename;
```

Note: sometimes you need to delete an imported file. You may do it with the following command:

```sql
exec utl_file.fremove('DATA_PUMP_DIR', 'your_file_name');
```

As soon as the file is transferred from the S3 bucket to the Oracle instance, you may start the import job:

```sql
DECLARE
  hdnl NUMBER;
BEGIN
  hdnl := DBMS_DATAPUMP.OPEN(operation => 'IMPORT', job_mode => 'SCHEMA', job_name => null);
  DBMS_DATAPUMP.ADD_FILE(handle => hdnl, filename => 'your_file_name', directory => 'DATA_PUMP_DIR',
                         filetype => dbms_datapump.ku$_file_type_dump_file);
  DBMS_DATAPUMP.ADD_FILE(handle => hdnl, filename => 'imp.log', directory => 'DATA_PUMP_DIR',
                         filetype => dbms_datapump.ku$_file_type_log_file);
  DBMS_DATAPUMP.METADATA_FILTER(hdnl, 'SCHEMA_EXPR', 'IN (''your_schema_name'')');
  DBMS_DATAPUMP.START_JOB(hdnl);
END;
```

Replace `your_file_name` and `your_schema_name` with your values.
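The doubled single quotes in the `METADATA_FILTER` call are PL/SQL escaping: the filter value is itself a string literal that contains quoted schema names. A small Python sketch (the helper names are hypothetical) that builds the filter for one or more schemas and reproduces the exact literal used in the block:

```python
from typing import Sequence


def plsql_literal(value: str) -> str:
    """Wrap a value in a SQL/PL/SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def schema_expr(schemas: Sequence[str]) -> str:
    """Build a SCHEMA_EXPR filter value such as IN ('HR','SCOTT')."""
    if not schemas:
        raise ValueError("at least one schema is required")
    return "IN (" + ",".join(plsql_literal(s) for s in schemas) + ")"


if __name__ == "__main__":
    # Reproduces the third argument of METADATA_FILTER in the import block:
    # prints 'IN (''your_schema_name'')'
    print(plsql_literal(schema_expr(["your_schema_name"])))
```

Unquoted schema names are stored in upper case in the data dictionary, so pass them the way they appear in `dba_users`.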

To check the status of your job, execute the following query:

```sql
SELECT owner_name, job_name, operation, job_mode, degree, state
  FROM dba_datapump_jobs
 WHERE state = 'EXECUTING';
```

Read the import log file to get more information about errors or unexpected results:

```sql
SELECT text FROM table(rdsadmin.rds_file_util.read_text_file('DATA_PUMP_DIR', 'imp.log'));
```

Exporting Oracle Data Pump file

Note: the following dump query may not export ALL your tables if some tables have no extents allocated. So, you need to generate a script to alter those tables:

```sql
SELECT 'ALTER TABLE '||table_name||' ALLOCATE EXTENT;' FROM user_tables WHERE segment_created = 'NO';
```

Run the generated statements before executing the dump query to get a full dump.
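The generated statements use `table_name` unquoted, which fails for tables created with case-sensitive (quoted) names. A minimal Python sketch, assuming you have already fetched the `table_name` values returned by the query into a list, that double-quotes each identifier so its stored case is preserved:

```python
from typing import Iterable, List


def quote_identifier(name: str) -> str:
    """Double-quote an Oracle identifier so its exact stored case is used."""
    # Oracle identifiers cannot contain double quotes, so reject them outright.
    if not name or '"' in name:
        raise ValueError(f"invalid Oracle identifier: {name!r}")
    return '"' + name + '"'


def allocate_extent_statements(table_names: Iterable[str]) -> List[str]:
    """Return one ALTER TABLE ... ALLOCATE EXTENT statement per table."""
    return [f"ALTER TABLE {quote_identifier(t)} ALLOCATE EXTENT;" for t in table_names]


if __name__ == "__main__":
    # Hypothetical result of the user_tables query (segment_created = 'NO').
    for stmt in allocate_extent_statements(["EMP", "MixedCase"]):
        print(stmt)
```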

To get an Oracle Data Pump file, you need to export your DB first:

```sql
DECLARE
  hdnl NUMBER;
BEGIN
  hdnl := DBMS_DATAPUMP.OPEN(operation => 'EXPORT', job_mode => 'SCHEMA', job_name => null);
  DBMS_DATAPUMP.ADD_FILE(handle => hdnl, filename => 'your_file_name', directory => 'DATA_PUMP_DIR',
                         filetype => dbms_datapump.ku$_file_type_dump_file);
  DBMS_DATAPUMP.ADD_FILE(handle => hdnl, filename => 'exp.log', directory => 'DATA_PUMP_DIR',
                         filetype => dbms_datapump.ku$_file_type_log_file);
  DBMS_DATAPUMP.METADATA_FILTER(hdnl, 'SCHEMA_EXPR', 'IN (''your_schema_name'')');
  DBMS_DATAPUMP.START_JOB(hdnl);
END;
```

Replace `your_file_name` and `your_schema_name` with your desired values.
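The export block differs from the import block only in the `operation` value and the log file name. A hedged Python sketch (the function name is hypothetical) that renders either block from parameters, which you can then paste into your SQL client:

```python
from typing import Sequence


def _lit(value: str) -> str:
    # SQL/PL/SQL string literal with embedded single quotes doubled.
    return "'" + value.replace("'", "''") + "'"


def datapump_schema_job(operation: str, dump_file: str, log_file: str,
                        schemas: Sequence[str]) -> str:
    """Render an anonymous PL/SQL block that runs a schema-mode Data Pump job."""
    op = operation.upper()
    if op not in ("IMPORT", "EXPORT"):
        raise ValueError("operation must be IMPORT or EXPORT")
    if not schemas:
        raise ValueError("at least one schema is required")
    # Inner literals quote each schema; _lit() below escapes the whole expression.
    expr = "IN (" + ",".join(_lit(s) for s in schemas) + ")"
    return "\n".join([
        "DECLARE",
        "  hdnl NUMBER;",
        "BEGIN",
        f"  hdnl := DBMS_DATAPUMP.OPEN(operation => {_lit(op)}, job_mode => 'SCHEMA', job_name => null);",
        f"  DBMS_DATAPUMP.ADD_FILE(handle => hdnl, filename => {_lit(dump_file)}, directory => 'DATA_PUMP_DIR', filetype => dbms_datapump.ku$_file_type_dump_file);",
        f"  DBMS_DATAPUMP.ADD_FILE(handle => hdnl, filename => {_lit(log_file)}, directory => 'DATA_PUMP_DIR', filetype => dbms_datapump.ku$_file_type_log_file);",
        f"  DBMS_DATAPUMP.METADATA_FILTER(hdnl, 'SCHEMA_EXPR', {_lit(expr)});",
        "  DBMS_DATAPUMP.START_JOB(hdnl);",
        "END;",
    ])


if __name__ == "__main__":
    print(datapump_schema_job("EXPORT", "your_file_name", "exp.log", ["your_schema_name"]))
```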

To check the status of your job, execute the following query:

```sql
SELECT owner_name, job_name, operation, job_mode, degree, state
  FROM dba_datapump_jobs
 WHERE state = 'EXECUTING';
```

Also, you may read `exp.log` during the export operation:

```sql
SELECT text FROM table(rdsadmin.rds_file_util.read_text_file('DATA_PUMP_DIR', 'exp.log'));
```

As soon as the export finishes, you may copy your exported file to the S3 bucket:

```sql
SELECT rdsadmin.rdsadmin_s3_tasks.upload_to_s3(
         p_bucket_name    => 'your_s3_bucket_name',
         p_prefix         => '',
         p_s3_prefix      => '',
         p_directory_name => 'DATA_PUMP_DIR')
       AS TASK_ID
  FROM DUAL;
```

And again, to check the upload status, execute the following query:

```sql
SELECT text FROM table(rdsadmin.rds_file_util.read_text_file('BDUMP', 'dbtask-<task_id>.log'));
```

Importing regular exported file

Sometimes you may be dealing with dumps that were exported by the Oracle Export utility. Their import is less efficient, but we have no other option.

Create the necessary tablespace, if needed:

```sql
CREATE TABLESPACE MY_TABLESPACE DATAFILE SIZE 5G AUTOEXTEND ON NEXT 1G;
```

Create a schema (user) for the imported database:

```sql
create user MY_USER identified by "MY_PASSWORD";
grant create session, resource, DBA to MY_USER;
alter user MY_USER quota 100M on users;
```

Grant your user all the permissions needed to import the DB:

```sql
grant read, write on directory data_pump_dir to MY_USER;
grant select_catalog_role to MY_USER;
grant execute on dbms_datapump to MY_USER;
grant execute on dbms_file_transfer to MY_USER;
```

Next, you need to install Oracle Instant Client on an Amazon Linux EC2 instance.

Download the following RPMs:

- Basic.

- Tools.

And install them:

```shell
sudo yum -y install oracle-instantclient19.6-basic-19.6.0.0.0-1.x86_64.rpm
sudo yum -y install oracle-instantclient19.6-tools-19.6.0.0.0-1.x86_64.rpm
```

Now you may import your dump file using the Oracle Import utility:

```shell
/usr/lib/oracle/19.6/client64/bin/imp \
  MY_USER@rds-instance-connection-endpoint-url/ORCL \
  FILE=/opt/my_exported_db.dmp FULL=y GRANTS=y
```

As soon as the process finishes, I definitely recommend exporting your DB using Oracle Data Pump, so you can import it much faster next time.
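If you run the legacy import from a script on the EC2 instance, passing the arguments as a list avoids shell-quoting problems. A minimal Python sketch; the endpoint below is a made-up example, and `imp` should prompt for the password because none is passed on the command line:

```python
import shlex
import subprocess
from typing import List

# Binary path installed by the Instant Client 19.6 tools RPM.
IMP_PATH = "/usr/lib/oracle/19.6/client64/bin/imp"


def imp_command(user: str, endpoint: str, service: str, dump_path: str) -> List[str]:
    """Build the argv for a full legacy import that also imports grants."""
    return [
        IMP_PATH,
        f"{user}@{endpoint}/{service}",  # EZConnect: user@host/service
        f"FILE={dump_path}",
        "FULL=y",
        "GRANTS=y",
    ]


def run_import(cmd: List[str]) -> None:
    """Run imp and raise CalledProcessError if it exits with a non-zero status."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical endpoint; use your RDS instance's connection endpoint.
    cmd = imp_command("MY_USER", "mydb.example.us-east-1.rds.amazonaws.com",
                      "ORCL", "/opt/my_exported_db.dmp")
    print(shlex.join(cmd))  # inspect the command; call run_import(cmd) to execute it
```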

Common errors

Oracle S3 integration not configured:

```
ORA-00904: "RDSADMIN"."RDSADMIN_S3_TASKS"."UPLOAD_TO_S3": invalid identifier
00904. 00000 -  "%s: invalid identifier"
*Cause:
*Action:
Error at Line: 52 Column: 8
```

You need to apply the correct option group or check the S3 integration role.
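When these steps are automated, it can help to recognise this particular error and point to the option group and role instead of retrying. A hypothetical Python check that matches only the error text shown above:

```python
import re

# Matches ORA-00904 raised on any procedure of the rdsadmin_s3_tasks package.
_S3_TASKS_MISSING = re.compile(
    r'ORA-00904:\s*"RDSADMIN"\."RDSADMIN_S3_TASKS"\.', re.IGNORECASE
)


def s3_integration_missing(error_text: str) -> bool:
    """Return True if the error suggests S3 integration is not configured."""
    return bool(_S3_TASKS_MISSING.search(error_text))


if __name__ == "__main__":
    msg = 'ORA-00904: "RDSADMIN"."RDSADMIN_S3_TASKS"."UPLOAD_TO_S3": invalid identifier'
    if s3_integration_missing(msg):
        print("Apply the S3_INTEGRATION option group and check the S3 integration role.")
```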

Related articles

- Using Terraform to deploy S3->SQS->Lambda integration

- CloudFormation: How to create DMS infrastructure for DB migration

- AWS Step Functions – How to manage long running tasks

- [The Ultimate Guide] – AWS Lambda Real-world use-cases

- How to integrate Jenkins with CloudFormation and Step Functions


I'm a passionate Cloud Infrastructure Architect with more than 15 years of experience in IT.

All of my posts represent my personal experience and opinion about the topic.

Source: https://hands-on.cloud/how-to-import-oracle-database-to-aws-using-oracle-s3-integration-feature/